In the first part we created a basic application that uses compute shaders to generate images.

Raytracing in Vulkan using C# — Part 2

by Jens


Refactoring

In Part 1 we created a gargantuan class, “VkContext”, that currently handles everything Vulkan-related. Let’s refactor it before we add any new features.

In VkContext we add the following expression-bodied properties for Vk and Device, as we need them almost everywhere.

public Vk Vk => _vk;

public Device Device => _device;

Now we want to extract all buffer- and image-related code into their own classes. As these share some allocation-related code, we create a common base class for them.

public abstract unsafe class Allocation : IDisposable

{

protected readonly VkContext VkContext;

protected Vk Vk => VkContext.Vk;

protected Device Device => VkContext.Device;

protected DeviceMemory Memory;

protected Allocation(VkContext vkContext) => VkContext = vkContext;

public Result MapMemory(ref void* pData) => Vk.MapMemory(Device, Memory, 0, Vk.WholeSize, 0, ref pData);

public void UnmapMemory() => Vk.UnmapMemory(Device, Memory);

public abstract void Dispose();

}

Let’s start with our new VkImage class, which extends Allocation. We copy all image-related code from VkContext and Program.cs into this class and rename it properly. We also change some details in the TransitionLayout method and remove a few parameters we no longer need. Furthermore, we want VkImage to be able to clean up after itself.

public sealed unsafe class VkImage : Allocation

{

public readonly uint Width;

public readonly uint Height;

public readonly Format Format;

public Extent3D ImageExtent => new(Width, Height, 1);

public readonly Image Image;

private readonly ImageView _imageView;

private ImageLayout _currentLayout;

public VkImage(VkContext context, uint width, uint height, Format format, ImageUsageFlags imageUsageFlags) : base(context)

{

Width = width;

Height = height;

Format = format;

//create image

var imageInfo = new ImageCreateInfo

{

SType = StructureType.ImageCreateInfo,

ImageType = ImageType.Type2D,

Extent = new Extent3D(Width, Height, 1),

Format = Format,

Samples = SampleCountFlags.Count1Bit,

SharingMode = SharingMode.Exclusive,

InitialLayout = ImageLayout.Undefined,

Tiling = ImageTiling.Optimal,

Usage = imageUsageFlags,

MipLevels = 1,

ArrayLayers = 1

};

Vk.CreateImage(Device, imageInfo, null, out Image);

_currentLayout = ImageLayout.Undefined;

//create and bind memory

Vk.GetImageMemoryRequirements(Device, Image, out var memReq);

Memory = VkContext.AllocateMemory(memReq, MemoryPropertyFlags.DeviceLocalBit);

Vk.BindImageMemory(Device, Image, Memory, 0);

//create view

var viewInfo = new ImageViewCreateInfo

{

SType = StructureType.ImageViewCreateInfo,

Image = Image,

ViewType = ImageViewType.Type2D,

Format = Format,

SubresourceRange =

{

AspectMask = ImageAspectFlags.ColorBit,

BaseMipLevel = 0,

BaseArrayLayer = 0,

LevelCount = 1,

LayerCount = 1

}

};

Vk.CreateImageView(Device, viewInfo, null, out _imageView);

}

public DescriptorImageInfo GetImageInfo() => new()

{

ImageLayout = ImageLayout.General,

ImageView = _imageView

};

public void TransitionLayout(ImageLayout newLayout)

{

var cmd = VkContext.BeginSingleTimeCommands();

var range = new ImageSubresourceRange(ImageAspectFlags.ColorBit, 0, 1, 0, 1);

var barrierInfo = new ImageMemoryBarrier

{

SType = StructureType.ImageMemoryBarrier,

OldLayout = _currentLayout,

NewLayout = newLayout,

Image = Image,

SubresourceRange = range,

};

//determining AccessMasks and PipelineStageFlags from layouts

PipelineStageFlags srcStage;

PipelineStageFlags dstStage;

if (_currentLayout == ImageLayout.Undefined)

{

barrierInfo.SrcAccessMask = 0;

srcStage = PipelineStageFlags.TopOfPipeBit;

}

else if (_currentLayout == ImageLayout.General)

{

barrierInfo.SrcAccessMask = AccessFlags.ShaderWriteBit; //the compute shader writes the storage image, so its writes must be made available

srcStage = PipelineStageFlags.ComputeShaderBit;

}

else if (_currentLayout == ImageLayout.TransferSrcOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.TransferReadBit;

srcStage = PipelineStageFlags.TransferBit;

}

else if (_currentLayout == ImageLayout.TransferDstOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.TransferWriteBit;

srcStage = PipelineStageFlags.TransferBit;

}

else if (_currentLayout == ImageLayout.ShaderReadOnlyOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.ShaderReadBit;

srcStage = PipelineStageFlags.FragmentShaderBit;

}

else throw new Exception($"Currently unsupported Layout Transition from {_currentLayout} to {newLayout}");

if (newLayout == ImageLayout.TransferSrcOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.TransferReadBit;

dstStage = PipelineStageFlags.TransferBit;

}

else if (newLayout == ImageLayout.TransferDstOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.TransferWriteBit;

dstStage = PipelineStageFlags.TransferBit;

}

else if (newLayout == ImageLayout.ShaderReadOnlyOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.ShaderReadBit;

dstStage = PipelineStageFlags.FragmentShaderBit;

}

else if (newLayout == ImageLayout.General)

{

barrierInfo.DstAccessMask = AccessFlags.ShaderReadBit | AccessFlags.ShaderWriteBit; //storage image is read and written in the compute shader

dstStage = PipelineStageFlags.ComputeShaderBit;

}

else

throw new Exception($"Currently unsupported Layout Transition from {_currentLayout} to {newLayout}");

Vk.CmdPipelineBarrier(cmd, srcStage, dstStage, 0, 0, null, 0, null, 1, barrierInfo);

VkContext.EndSingleTimeCommands(cmd);

_currentLayout = newLayout;

}

private void CopyToBuffer(Buffer buffer)

{

//check if usable as transfer source -> transition if not

var tmpLayout = _currentLayout;

if(_currentLayout != ImageLayout.TransferSrcOptimal)

TransitionLayout(ImageLayout.TransferSrcOptimal);

var cmd = VkContext.BeginSingleTimeCommands();

var layers = new ImageSubresourceLayers(ImageAspectFlags.ColorBit, 0, 0, 1);

var copyRegion = new BufferImageCopy(0, 0, 0, layers, default, ImageExtent);

Vk.CmdCopyImageToBuffer(cmd, Image, _currentLayout, buffer, 1, copyRegion);

VkContext.EndSingleTimeCommands(cmd);

//transition back to the original layout if it was changed (Undefined is not a valid transition target)

if(_currentLayout != tmpLayout && tmpLayout != ImageLayout.Undefined)

TransitionLayout(tmpLayout);

}

public void SetData(void* source)

{

var size = Width * Height * 4;

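//host-coherent memory needs no explicit flush after writing through the mapped pointer

using var buffer = new VkBuffer(VkContext, size, BufferUsageFlags.TransferSrcBit,

MemoryPropertyFlags.HostVisibleBit | MemoryPropertyFlags.HostCoherentBit);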

//copy data using a staging buffer

void* mappedData = default;

buffer.MapMemory(ref mappedData);

System.Buffer.MemoryCopy(source, mappedData, size, size);

buffer.UnmapMemory();

var tmpLayout = _currentLayout;

if(_currentLayout != ImageLayout.TransferDstOptimal)

TransitionLayout(ImageLayout.TransferDstOptimal);

buffer.CopyToImage(this);

if(_currentLayout != tmpLayout)

TransitionLayout(tmpLayout);

}

public void CopyTo(void* destination)

{

//this is valid for R8G8B8A8 formats and permutations only

var size = Width * Height * 4;

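//host-coherent memory needs no explicit invalidate before reading through the mapped pointer

using var buffer = new VkBuffer(VkContext, size, BufferUsageFlags.TransferDstBit,

MemoryPropertyFlags.HostVisibleBit | MemoryPropertyFlags.HostCoherentBit);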

CopyToBuffer(buffer.Buffer);

//copy data using a staging buffer

void* mappedData = default;

buffer.MapMemory(ref mappedData);

System.Buffer.MemoryCopy(mappedData, destination, size, size);

buffer.UnmapMemory();

}

public void Save(string destination)

{

var imageData = new uint[Width * Height];

fixed (void* pImageData = imageData)

{

CopyTo(pImageData);

//color type is hardcoded! convert if needed

var info = new SKImageInfo((int) Width, (int) Height, SKColorType.Rgba8888, SKAlphaType.Premul);

using var bmp = new SKBitmap();

bmp.InstallPixels(info, (nint) pImageData, info.RowBytes);

using var fs = File.Create(destination);

bmp.Encode(fs, SKEncodedImageFormat.Png, 100);

}

}

public override void Dispose()

{

Vk.DestroyImageView(Device, _imageView, null);

//destroy the image before freeing the memory bound to it

Vk.DestroyImage(Device, Image, null);

Vk.FreeMemory(Device, Memory, null);

}

}

We will go a similar route for the VkBuffer class.

public sealed unsafe class VkBuffer : Allocation

{

public uint Size;

public Buffer Buffer;

private BufferUsageFlags _bufferUsageFlags;

private MemoryPropertyFlags _memoryPropertyFlags;

public VkBuffer(VkContext context, uint size, BufferUsageFlags usageFlags, MemoryPropertyFlags memoryFlags) : base(context)

{

Size = size;

_bufferUsageFlags = usageFlags;

_memoryPropertyFlags = memoryFlags;

//create buffer

var bufferInfo = new BufferCreateInfo

{

SType = StructureType.BufferCreateInfo,

Usage = _bufferUsageFlags,

Size = size,

SharingMode = SharingMode.Exclusive

};

Vk.CreateBuffer(Device, bufferInfo, null, out Buffer);

//allocate and bind memory

Vk.GetBufferMemoryRequirements(Device, Buffer, out var memReq);

Memory = VkContext.AllocateMemory(memReq, _memoryPropertyFlags);

Vk.BindBufferMemory(Device, Buffer, Memory, 0);

}

public void CopyToImage(VkImage vkImage)

{

var cmd = VkContext.BeginSingleTimeCommands();

var layers = new ImageSubresourceLayers(ImageAspectFlags.ColorBit, 0, 0, 1);

var copyRegion = new BufferImageCopy(0, 0, 0, layers, default, vkImage.ImageExtent);

Vk.CmdCopyBufferToImage(cmd, Buffer, vkImage.Image, ImageLayout.TransferDstOptimal, 1, copyRegion);

VkContext.EndSingleTimeCommands(cmd);

}

public override void Dispose()

{

//destroy the buffer before freeing the memory bound to it

Vk.DestroyBuffer(Device, Buffer, null);

Vk.FreeMemory(Device, Memory, null);

}

}

If we step through the code carefully, we will see that it was basically a copy-and-paste operation. We can now delete the two AllocatedX structs we created. Our remaining task is to fix all errors in Program.cs and delete obsolete code.

The result will look similar to this:

using RaytracingVulkan;

using Silk.NET.Vulkan;

using var ctx = new VkContext();

var poolSizes = new DescriptorPoolSize[] {new() {Type = DescriptorType.StorageImage, DescriptorCount = 1000}};

var descriptorPool = ctx.CreateDescriptorPool(poolSizes);

var binding = new DescriptorSetLayoutBinding

{

Binding = 0,

DescriptorCount = 1,

DescriptorType = DescriptorType.StorageImage,

StageFlags = ShaderStageFlags.ComputeBit

};

var setLayout = ctx.CreateDescriptorSetLayout(new[] {binding});

var descriptorSet = ctx.AllocateDescriptorSet(descriptorPool, setLayout);

var shaderModule = ctx.LoadShaderModule("./assets/shaders/raytracing.comp.spv");

var pipelineLayout = ctx.CreatePipelineLayout(setLayout);

var pipeline = ctx.CreateComputePipeline(pipelineLayout, shaderModule);

//image creation

var image = new VkImage(ctx, 500, 500, Format.R8G8B8A8Unorm,ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);

image.TransitionLayout(ImageLayout.General);

var imageInfo = image.GetImageInfo();

ctx.UpdateDescriptorSetImage(ref descriptorSet, imageInfo, DescriptorType.StorageImage, 0);

//execute compute shader

var cmd = ctx.BeginSingleTimeCommands();

ctx.BindComputePipeline(cmd, pipeline);

ctx.BindComputeDescriptorSet(cmd, descriptorSet, pipelineLayout);

ctx.Dispatch(cmd, 500/8, 500/8, 1);

ctx.EndSingleTimeCommands(cmd);

//destroy pipeline objects

ctx.DestroyDescriptorPool(descriptorPool);

ctx.DestroyDescriptorSetLayout(setLayout);

ctx.DestroyShaderModule(shaderModule);

ctx.DestroyPipelineLayout(pipelineLayout);

ctx.DestroyPipeline(pipeline);

//save image

image.Save("./render.png");

//destroy image objects

image.Dispose();

The detailed changes can be seen in this commit:

refactor image and buffer · JensKrumsieck/raytracing-vulkan@d4f7437 (github.com)
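One detail in Program.cs deserves a second look: `500/8` is integer division and yields 62 workgroups per axis. Assuming an 8x8 local size in the shader (which is what the division suggests), that covers only 496 of the 500 pixels in each direction. A common remedy is to round the group count up and let the shader skip invocations that fall outside the image. The sketch below shows only the host-side arithmetic; `DispatchMath.DispatchGroups` is a hypothetical helper, and the matching bounds check in the shader (comparing `gl_GlobalInvocationID.xy` with the image size) is assumed rather than shown.

```csharp
using System;

public static class DispatchMath
{
    // Number of workgroups needed so that groups * localSize >= extent,
    // i.e. ceil(extent / localSize) computed without floating point or overflow.
    public static uint DispatchGroups(uint extent, uint localSize)
    {
        if (localSize == 0) throw new ArgumentOutOfRangeException(nameof(localSize));
        return extent / localSize + (extent % localSize == 0 ? 0u : 1u);
    }
}
```

With such a helper the call could read `ctx.Dispatch(cmd, DispatchMath.DispatchGroups(500, 8), DispatchMath.DispatchGroups(500, 8), 1);`. The same consideration applies to any image size that is not a multiple of the local size.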

Adding a User Interface

Now we want to add a user interface to our application, so we have to decide which UI framework to use. We could use ImGui at this point, but getting ImGui to run involves a lot of code (see JensKrumsieck/Catalyze: Vulkan & ImGui Apps in C# using Silk.NET and ImGui.NET (github.com) for an example). We will use Avalonia for this project instead. This may turn out to be dumb, but let’s find out; I do not have any prior experience with Avalonia.

Avalonia has the concept of a WriteableBitmap, which we want to write to. To get everything up and running, let’s first follow the official “Getting Started” guide: Getting Started with Avalonia | Avalonia UI

In a terminal, we run the following command to install the templates, and then use our IDE to create an Avalonia app called {OurProject}.UI (or run “dotnet new avalonia.app -o {OurProject}.UI” if you prefer the command line).

dotnet new install Avalonia.Templates

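To use the MVVM Community Toolkit, we install the “Microsoft.Toolkit.Mvvm” package into our fresh project (newer versions of the toolkit are published as “CommunityToolkit.Mvvm”, with correspondingly renamed namespaces).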
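To demystify the code generator a little: for a field such as `[ObservableProperty] private WriteableBitmap _image;` the toolkit emits a public `Image` property whose setter raises `PropertyChanged` when the value actually changes. The hand-written class below is a simplified approximation of that behaviour using only `System.ComponentModel`; `ManualViewModel` and the `string` payload are placeholders, and the real generated code differs in detail (for example, it also raises `PropertyChanging`).

```csharp
#nullable enable
using System.Collections.Generic;
using System.ComponentModel;
using System.Runtime.CompilerServices;

// Simplified stand-in for what [ObservableProperty] generates (illustration only).
public class ManualViewModel : INotifyPropertyChanged
{
    public event PropertyChangedEventHandler? PropertyChanged;

    private string _image = "";

    // The property name is derived from the field name: _image -> Image.
    public string Image
    {
        get => _image;
        set
        {
            // Notify only when the value really changes.
            if (EqualityComparer<string>.Default.Equals(_image, value)) return;
            _image = value;
            OnPropertyChanged();
        }
    }

    // CallerMemberName fills in "Image" when called from the setter above.
    protected void OnPropertyChanged([CallerMemberName] string? name = null) =>
        PropertyChanged?.Invoke(this, new PropertyChangedEventArgs(name));
}
```

This notification is what lets an Avalonia `{Binding Image}` pick up a newly assigned bitmap without any extra code in the view.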

We create a partial class “MainViewModel” to hold our logic. This needs to extend ObservableObject (coming from Microsoft.Toolkit.Mvvm.ComponentModel) and implement IDisposable. The Toolkit’s code generators will do the magic for us. 🧙♂️

public unsafe partial class MainViewModel : ObservableObject, IDisposable

{

//our logic

}

We add an image to our MainWindow.axaml and specify that we are going to use MainViewModel as our DataContext. We also create a new MainViewModel instance in our code-behind file, MainWindow.axaml.cs.

<Window xmlns="https://github.com/avaloniaui"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

xmlns:viewModels="clr-namespace:RaytracingVulkan.UI.ViewModels"

mc:Ignorable="d" d:DesignWidth="800" d:DesignHeight="450"

x:Class="RaytracingVulkan.UI.MainWindow"

x:DataType="viewModels:MainViewModel"

Title="RaytracingVulkan.UI">

<Design.DataContext>

<viewModels:MainViewModel/>

</Design.DataContext>

<Image Source="{Binding Image}" x:Name="Image" Width="500" Height="500"/>

</Window>

public partial class MainWindow : Window

{

private readonly MainViewModel _viewModel = new();

public MainWindow()

{

InitializeComponent();

DataContext = _viewModel;

}

}

To make Avalonia run the window’s Render method every frame, we override it and invalidate the visual at the end of each call. This is also where we will render our image, so let’s add a call to _viewModel.Render(), which we will implement soon.

public override void Render(DrawingContext context)

{

base.Render(context);

_viewModel.Render();

Dispatcher.UIThread.Post(InvalidateVisual, DispatcherPriority.Render);

}

Furthermore, we want to dispose our MainViewModel when the window is closing.

protected override void OnClosing(WindowClosingEventArgs e)

{

base.OnClosing(e);

_viewModel.Dispose();

}

To get the binding working, we add a private field with an ObservablePropertyAttribute to our view model class. The toolkit then generates a property named “Image” for us, which is what our XAML binding uses.

[ObservableProperty] private WriteableBitmap _image;

Now we copy, paste and reorganize the code from our old Program.cs file (from Part 1).

First, we create fields for all the objects we use, so that we do not (re)allocate them every frame.

private readonly VkContext _context = (Application.Current as App)!.VkContext;

private readonly DescriptorPool _descriptorPool;

private readonly DescriptorSet _descriptorSet;

private readonly DescriptorSetLayout _setLayout;

private readonly PipelineLayout _pipelineLayout;

private readonly Pipeline _pipeline;

private readonly CommandBuffer _cmd;

private readonly VkImage _vkImage;

private readonly VkBuffer _vkBuffer;

private readonly void* _mappedData;

The initialization code moves into our constructor:

public MainViewModel()

{

//pipeline creation

var poolSizes = new DescriptorPoolSize[] {new() {Type = DescriptorType.StorageImage, DescriptorCount = 1000}};

_descriptorPool = _context.CreateDescriptorPool(poolSizes);

var binding = new DescriptorSetLayoutBinding

{

Binding = 0,

DescriptorCount = 1,

DescriptorType = DescriptorType.StorageImage,

StageFlags = ShaderStageFlags.ComputeBit

};

_setLayout = _context.CreateDescriptorSetLayout(new[] {binding});

_descriptorSet = _context.AllocateDescriptorSet(_descriptorPool, _setLayout);

var shaderModule = _context.LoadShaderModule("./assets/shaders/raytracing.comp.spv");

_pipelineLayout = _context.CreatePipelineLayout(_setLayout);

_pipeline = _context.CreateComputePipeline(_pipelineLayout, shaderModule);

var sz = 2000u;

//image creation

_vkImage = new VkImage(_context, sz, sz, Format.B8G8R8A8Unorm,ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);

_vkImage.TransitionLayoutImmediate(ImageLayout.General);

_context.UpdateDescriptorSetImage(ref _descriptorSet, _vkImage.GetImageInfo(), DescriptorType.StorageImage, 0);

_image = new WriteableBitmap(new PixelSize((int) sz, (int) sz), new Vector(96, 96), PixelFormat.Bgra8888);

//we don't need it anymore

_context.DestroyShaderModule(shaderModule);

_cmd = _context.AllocateCommandBuffer();

_vkBuffer = new VkBuffer(_context, _vkImage.Width * _vkImage.Height * 4, BufferUsageFlags.TransferDstBit,

MemoryPropertyFlags.HostCachedBit | MemoryPropertyFlags.HostCoherentBit |

MemoryPropertyFlags.HostVisibleBit);

_vkBuffer.MapMemory(ref _mappedData);

}

Take your time and compare this code with Program.cs: almost all of it is copied over. I decided to keep the staging buffer in a field to avoid reallocating it and to keep its memory mapped permanently.
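The image is created as `B8G8R8A8Unorm` and the `WriteableBitmap` as `PixelFormat.Bgra8888`, so both share the same byte order and a plain memory copy is enough. Had we kept Part 1's `R8G8B8A8Unorm`, red and blue would appear swapped in the UI. Keep in mind that the Vulkan specification makes storage-image support mandatory for `R8G8B8A8Unorm` but not for `B8G8R8A8Unorm`, so on some devices the RGBA route plus a CPU-side swizzle may be the fallback. A minimal sketch of such a swizzle, assuming tightly packed 4-byte pixels; `PixelSwizzle.SwapRedBlue` is a hypothetical helper, not part of the project:

```csharp
using System;

public static class PixelSwizzle
{
    // Converts RGBA8 <-> BGRA8 in place by swapping byte 0 and byte 2 of every pixel.
    // Green and alpha stay where they are; applying it twice restores the input.
    public static void SwapRedBlue(Span<byte> pixels)
    {
        if (pixels.Length % 4 != 0)
            throw new ArgumentException("Expected tightly packed 4-byte pixels.", nameof(pixels));

        for (var i = 0; i < pixels.Length; i += 4)
        {
            (pixels[i], pixels[i + 2]) = (pixels[i + 2], pixels[i]);
        }
    }
}
```

Since this touches every pixel every frame, matching the formats, as done here, is the cheaper option whenever the device allows it.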

We add a new method, “AllocateCommandBuffer”, to our VkContext; it is the first part of the former body of “BeginSingleTimeCommands”. In the same way, the submission part of “EndSingleTimeCommands” moves into a new method, “EndCommandBuffer”. We will do something similar in VkImage a little later.

public CommandBuffer AllocateCommandBuffer()

{

var allocInfo = new CommandBufferAllocateInfo

{

SType = StructureType.CommandBufferAllocateInfo,

CommandPool = _commandPool,

CommandBufferCount = 1,

Level = CommandBufferLevel.Primary

};

_vk.AllocateCommandBuffers(_device, allocInfo, out var commandBuffer);

return commandBuffer;

}

public CommandBuffer BeginSingleTimeCommands()

{

var commandBuffer = AllocateCommandBuffer();

BeginCommandBuffer(commandBuffer);

return commandBuffer;

}

public void EndCommandBuffer(CommandBuffer cmd)

{

_vk.EndCommandBuffer(cmd);

var submitInfo = new SubmitInfo

{

SType = StructureType.SubmitInfo,

CommandBufferCount = 1,

PCommandBuffers = &cmd

};

SubmitMainQueue(submitInfo, default);

}

public void EndSingleTimeCommands(CommandBuffer cmd)

{

EndCommandBuffer(cmd);

WaitForQueue();

_vk.FreeCommandBuffers(_device, _commandPool, 1, cmd);

}

We now add the Dispose method to our view model where, as before, we clean up all resources (the ShaderModule is already destroyed right after pipeline creation).

public void Dispose()

{

_vkBuffer.UnmapMemory();

_vkBuffer.Dispose();

_vkImage.Dispose();

_context.DestroyDescriptorPool(_descriptorPool);

_context.DestroyDescriptorSetLayout(_setLayout);

_context.DestroyPipelineLayout(_pipelineLayout);

_context.DestroyPipeline(_pipeline);

_image.Dispose();

GC.SuppressFinalize(this);

}

Now only the Render method is missing in our view model class. We start recording our command buffer by transitioning the image to General. We then bind the pipeline and the descriptor set, run the compute shader with CmdDispatch and transition the image to TransferSrcOptimal. After that, the copy logic from VkImage applies in a modified fashion. As you can see, I modified the TransitionLayout and CopyToBuffer methods to record into our long-lived command buffer instead of a single-time one.

public void Render()

{

//execute compute shader

_context.BeginCommandBuffer(_cmd);

_vkImage.TransitionLayout(_cmd, ImageLayout.General);

_context.BindComputePipeline(_cmd, _pipeline);

_context.BindComputeDescriptorSet(_cmd, _descriptorSet, _pipelineLayout);

_context.Dispatch(_cmd, _vkImage.Width/32, _vkImage.Height/32, 1);

_vkImage.TransitionLayout(_cmd, ImageLayout.TransferSrcOptimal);

_vkImage.CopyToBuffer(_cmd, _vkBuffer.Buffer);

_context.EndCommandBuffer(_cmd);

_context.WaitForQueue();

using var buffer = _image.Lock();

var size = _vkImage.Width * _vkImage.Height * 4;

System.Buffer.MemoryCopy(_mappedData, (void*) buffer.Address, size, size);

}

To tell them apart, I renamed the previous implementations with an “Immediate” suffix, as their command buffer is submitted immediately. The command-buffer variant that also transitions the image’s layout around the copy is called TransitionAndCopyToBuffer, while CopyToBuffer now only records the copy itself.

public void TransitionLayoutImmediate(ImageLayout newLayout)

{

var cmd = VkContext.BeginSingleTimeCommands();

TransitionLayout(cmd, newLayout);

VkContext.EndSingleTimeCommands(cmd);

}

public void TransitionLayout(CommandBuffer cmd, ImageLayout newLayout)

{

var range = new ImageSubresourceRange(ImageAspectFlags.ColorBit, 0, 1, 0, 1);

var barrierInfo = new ImageMemoryBarrier

{

SType = StructureType.ImageMemoryBarrier,

OldLayout = _currentLayout,

NewLayout = newLayout,

Image = Image,

SubresourceRange = range,

};

//determining AccessMasks and PipelineStageFlags from layouts

PipelineStageFlags srcStage;

PipelineStageFlags dstStage;

if (_currentLayout == ImageLayout.Undefined)

{

barrierInfo.SrcAccessMask = 0;

srcStage = PipelineStageFlags.TopOfPipeBit;

}

else if (_currentLayout == ImageLayout.General)

{

barrierInfo.SrcAccessMask = AccessFlags.ShaderWriteBit; //the compute shader writes the storage image, so its writes must be made available

srcStage = PipelineStageFlags.ComputeShaderBit;

}

else if (_currentLayout == ImageLayout.TransferSrcOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.TransferReadBit;

srcStage = PipelineStageFlags.TransferBit;

}

else if (_currentLayout == ImageLayout.TransferDstOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.TransferWriteBit;

srcStage = PipelineStageFlags.TransferBit;

}

else if (_currentLayout == ImageLayout.ShaderReadOnlyOptimal)

{

barrierInfo.SrcAccessMask = AccessFlags.ShaderReadBit;

srcStage = PipelineStageFlags.FragmentShaderBit;

}

else throw new Exception($"Currently unsupported Layout Transition from {_currentLayout} to {newLayout}");

if (newLayout == ImageLayout.TransferSrcOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.TransferReadBit;

dstStage = PipelineStageFlags.TransferBit;

}

else if (newLayout == ImageLayout.TransferDstOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.TransferWriteBit;

dstStage = PipelineStageFlags.TransferBit;

}

else if (newLayout == ImageLayout.ShaderReadOnlyOptimal)

{

barrierInfo.DstAccessMask = AccessFlags.ShaderReadBit;

dstStage = PipelineStageFlags.FragmentShaderBit;

}

else if (newLayout == ImageLayout.General)

{

barrierInfo.DstAccessMask = AccessFlags.ShaderReadBit | AccessFlags.ShaderWriteBit; //storage image is read and written in the compute shader

dstStage = PipelineStageFlags.ComputeShaderBit;

}

else

throw new Exception($"Currently unsupported Layout Transition from {_currentLayout} to {newLayout}");

Vk.CmdPipelineBarrier(cmd, srcStage, dstStage, 0, 0, null, 0, null, 1, barrierInfo);

_currentLayout = newLayout;

}

public void CopyToBufferImmediate(Buffer buffer)

{

//check if usable as transfer source -> transition if not

var tmpLayout = _currentLayout;

if(_currentLayout != ImageLayout.TransferSrcOptimal)

TransitionLayoutImmediate(ImageLayout.TransferSrcOptimal);

var cmd = VkContext.BeginSingleTimeCommands();

var layers = new ImageSubresourceLayers(ImageAspectFlags.ColorBit, 0, 0, 1);

var copyRegion = new BufferImageCopy(0, 0, 0, layers, default, ImageExtent);

Vk.CmdCopyImageToBuffer(cmd, Image, _currentLayout, buffer, 1, copyRegion);

VkContext.EndSingleTimeCommands(cmd);

//transition back to the original layout if it was changed (Undefined is not a valid transition target)

if(_currentLayout != tmpLayout && tmpLayout != ImageLayout.Undefined)

TransitionLayoutImmediate(tmpLayout);

}

public void TransitionAndCopyToBuffer(CommandBuffer cmd, Buffer buffer)

{

//check if usable as transfer source -> transition if not

var tmpLayout = _currentLayout;

if(_currentLayout != ImageLayout.TransferSrcOptimal)

TransitionLayout(cmd, ImageLayout.TransferSrcOptimal);

CopyToBuffer(cmd, buffer);

//transition back to the original layout if it was changed (Undefined is not a valid transition target)

if(_currentLayout != tmpLayout && tmpLayout != ImageLayout.Undefined)

TransitionLayout(cmd, tmpLayout);

}

public void CopyToBuffer(CommandBuffer cmd, Buffer buffer)

{

var layers = new ImageSubresourceLayers(ImageAspectFlags.ColorBit, 0, 0, 1);

var copyRegion = new BufferImageCopy(0, 0, 0, layers, default, ImageExtent);

Vk.CmdCopyImageToBuffer(cmd, Image, _currentLayout, buffer, 1, copyRegion);

}

The full UI commit is available here: Part 2 UI (#1) · JensKrumsieck/raytracing-vulkan@bec642b (github.com)

I rushed through this part a bit so that we can move on to adding new features to our shader.
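Back in `Render`, the single `System.Buffer.MemoryCopy` assumes that the locked framebuffer's rows are tightly packed, i.e. `buffer.RowBytes == Width * 4`. That is the usual case, but `ILockedFramebuffer` exposes `RowBytes` precisely because it is not guaranteed. A row-by-row copy handles padded rows. The sketch below works on spans over managed arrays so it runs on its own; `RowCopy.CopyRows` is a hypothetical helper name.

```csharp
using System;

public static class RowCopy
{
    // Copies `rows` rows of `rowBytes` payload bytes each from a source with stride `srcStride`
    // into a destination with stride `dstStride`. Padding bytes in the destination are not touched.
    public static void CopyRows(ReadOnlySpan<byte> src, int srcStride,
                                Span<byte> dst, int dstStride,
                                int rowBytes, int rows)
    {
        if (rowBytes > srcStride || rowBytes > dstStride)
            throw new ArgumentException("The row payload must fit into both strides.");

        for (var y = 0; y < rows; y++)
        {
            src.Slice(y * srcStride, rowBytes).CopyTo(dst.Slice(y * dstStride, rowBytes));
        }
    }
}
```

Inside the unsafe view model, the spans could wrap the raw pointers, e.g. `new Span<byte>(_mappedData, (int) size)` for the source, with `Width * 4` as the source stride and `buffer.RowBytes` as the destination stride.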

Adding a Camera

Next, we add a movable camera to our renderer. First, we create a camera class with the fields a camera needs. This camera system is based on the one from TheCherno’s Ray Tracing series and therefore differs from the one Peter Shirley uses in the “Ray Tracing in One Weekend” series.

The biggest difference is the use of matrices instead of the calculations used in the books.
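To illustrate what the matrices buy us, here is a sketch of how a ray direction can be derived from them: unproject a point's normalized device coordinates onto the far plane with the inverse projection, then rotate the resulting direction into world space with the inverse view matrix. It runs on the CPU with `System.Numerics`, using the same `CreatePerspectiveFieldOfView` and `CreateLookAt` factories as the camera class that follows. It illustrates the general idea and is not code from the series or the repository; `CameraRays.RayDirection` is a hypothetical name. Note that `System.Numerics` uses row vectors, hence `Vector4.Transform(vector, matrix)`.

```csharp
using System;
using System.Numerics;

public static class CameraRays
{
    // Converts a point in normalized device coordinates (x and y in -1..1)
    // into a normalized world-space ray direction.
    public static Vector3 RayDirection(Vector2 ndc, Matrix4x4 inverseProjection, Matrix4x4 inverseView)
    {
        // System.Numerics perspective matrices map the far plane to depth 1,
        // so (x, y, 1, 1) is a clip-space point on the far plane.
        var target = Vector4.Transform(new Vector4(ndc.X, ndc.Y, 1f, 1f), inverseProjection);

        // The perspective divide yields the view-space position of that point.
        var viewDirection = Vector3.Normalize(new Vector3(target.X, target.Y, target.Z) / target.W);

        // w = 0 applies only the camera's rotation, not its translation.
        var world = Vector4.Transform(new Vector4(viewDirection, 0f), inverseView);
        return Vector3.Normalize(new Vector3(world.X, world.Y, world.Z));
    }
}
```

In the renderer itself this calculation would naturally live in the compute shader, with the inverse matrices supplied through a uniform buffer.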

public class Camera

{

public Vector3 Position;

public Vector3 Forward;

public Matrix4x4 Projection;

public Matrix4x4 View;

public Matrix4x4 InverseProjection;

public Matrix4x4 InverseView;

}

For those matrices we need the aspect ratio, the vertical field of view and the near and far clip distances of our camera. Let’s initialize those in our constructor (the field of view is converted from degrees to radians).

private float _viewportWidth;

private float _viewportHeight;

private float AspectRatio => _viewportWidth / _viewportHeight;

private readonly float _verticalFov;

private readonly float _nearClip;

private readonly float _farClip;

public Camera(float verticalFovDegrees, float nearClip, float farClip)

{

Forward = -Vector3.UnitZ;

Position = Vector3.Zero;

_verticalFov = verticalFovDegrees * MathF.PI / 180;

_nearClip = nearClip;

_farClip = farClip;

RecalculateProjection();

RecalculateView();

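}

The constructor also calls RecalculateProjection and RecalculateView to fill our four matrices. Note that the viewport size is still zero at this point, so the aspect ratio is NaN (0/0 for floats) and the projection only becomes meaningful once Resize is called.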

private void RecalculateProjection()

{

Projection = Matrix4x4.CreatePerspectiveFieldOfView(_verticalFov, AspectRatio, _nearClip, _farClip);

Matrix4x4.Invert(Projection, out InverseProjection);

}

public void RecalculateView()

{

View = Matrix4x4.CreateLookAt(Position, Position + Forward, Vector3.UnitY);

Matrix4x4.Invert(View, out InverseView);

}

As the projection matrix depends on the viewport size, we add a Resize method that updates our size-related fields and recalculates the matrix. And that is the whole camera class for now.

public void Resize(uint newWidth, uint newHeight)

{

_viewportWidth = newWidth;

_viewportHeight = newHeight;

RecalculateProjection();

}

For UI interaction, we wrap the camera and its position in a separate view model.

public partial class CameraViewModel : ObservableObject

{

public CameraViewModel(Camera activeCamera) => _activeCamera = activeCamera;

[ObservableProperty] private Camera _activeCamera;

public Vector3 Position

{

get => _activeCamera.Position;

set

{

_activeCamera.Position = value;

OnPropertyChanged();

OnPropertyChanged(nameof(X));

OnPropertyChanged(nameof(Y));

OnPropertyChanged(nameof(Z));

_activeCamera.RecalculateView();

}

}

public float X

{

get => Position.X;

set => Position = Position with {X = value};

}

public float Y

{

get => Position.Y;

set => Position = Position with {Y = value};

}

public float Z

{

get => Position.Z;

set => Position = Position with {Z = value};

}

}

As we want our camera to be interactive, we create an InputHandler class that simply keeps track of pressed and released keys. The ToString method is for debugging purposes. We use a HashSet because it cannot contain duplicate items, so a held key that fires repeated KeyDown events is still stored only once.

public class InputHandler

{

public HashSet<Key> PressedKeys { get; } = new();

public void Down (Key k) => PressedKeys.Add(k);

public void Up (Key k) => PressedKeys.Remove(k);

public override string ToString() => string.Join(", ", PressedKeys);

}

As our approach of invalidating the whole window every frame is quite intrusive, we want to use the compositor instead.

In the code-behind class of our window, we move all initialization code into an Initialize method and handle everything from there:

- We set _isInitialized to true

- We set up a SizeChanged event for our image

- We get the compositor

- And call UpdateFrame

In UpdateFrame we reset the update request, check whether we are ready to render and call the view model’s Render method. Then we call RequestCompositionUpdate, which calls UpdateFrame again with the next composition update. We still use InvalidateVisual for our image.

The Resize method calls a yet-to-be-written Resize method in the view model class.

public partial class MainWindow : Window

{

private readonly MainViewModel _viewModel;

private readonly InputHandler _input = new();

private Compositor? _compositor;

private bool _isInitialized;

private bool _updateRequested;

public MainWindow()

{

InitializeComponent();

DataContext = _viewModel = new MainViewModel(_input);

Initialize();

}

private void Initialize()

{

_isInitialized = true;

Image.SizeChanged += Resize;

var selfVisual = ElementComposition.GetElementVisual(this)!;

_compositor = selfVisual.Compositor;

UpdateFrame();

}

private void UpdateFrame()

{

_updateRequested = false;

//stop the render loop once the window is closing

if (!_isInitialized) return;

_viewModel.Render();

_compositor?.RequestCompositionUpdate(UpdateFrame);

Dispatcher.UIThread.Post(Image.InvalidateVisual, DispatcherPriority.Render);

}

private void Resize(object? sender, SizeChangedEventArgs e) => _viewModel.Resize((uint) e.NewSize.Width, (uint) e.NewSize.Height);

// other code

}

For our input handler, we override OnKeyDown as well as OnKeyUp and call the Down and Up methods of our handler.

protected override void OnKeyDown(KeyEventArgs e)

{

base.OnKeyDown(e);

_input.Down(e.Key);

}

protected override void OnKeyUp(KeyEventArgs e)

{

base.OnKeyUp(e);

_input.Up(e.Key);

}

I also updated the window’s closing logic: rendering stops in OnClosing, and the view model is disposed in OnClosed.

protected override void OnClosing(WindowClosingEventArgs e)

{

_isInitialized = false;

Image.SizeChanged -= Resize;

base.OnClosing(e);

}

protected override void OnClosed(EventArgs e)

{

_viewModel.Dispose();

base.OnClosed(e);

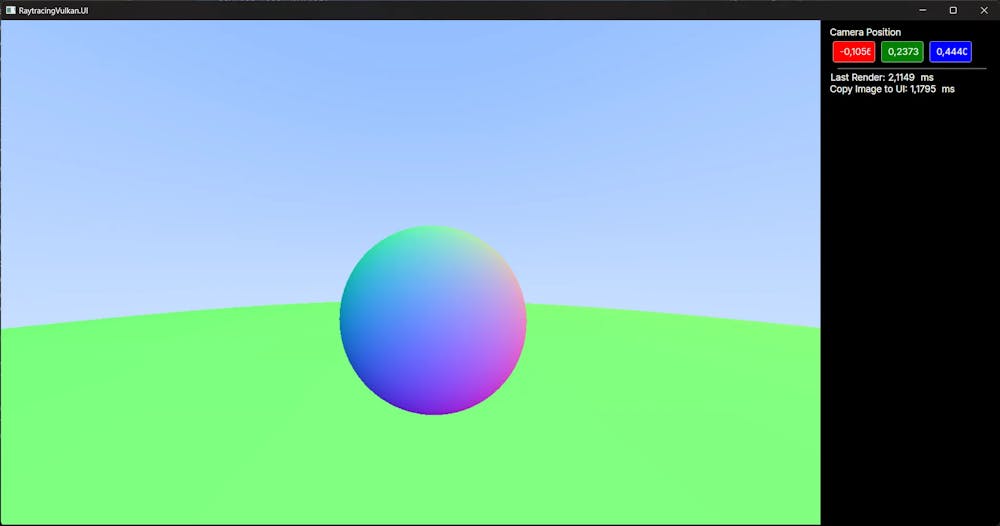

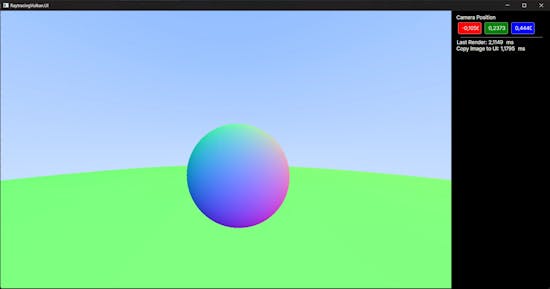

}In the axaml file we create a DockPanel to have the image with a sidebar on the right side. This contains control to set the camera’s position and displays render- and copy time.

<Window xmlns="https://github.com/avaloniaui"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

xmlns:viewModels="clr-namespace:RaytracingVulkan.UI.ViewModels"

mc:Ignorable="d" d:DesignWidth="800" d:DesignHeight="450"

x:Class="RaytracingVulkan.UI.MainWindow"

x:DataType="viewModels:MainViewModel"

Title="RaytracingVulkan.UI">

<Design.DataContext>

<viewModels:MainViewModel/>

</Design.DataContext>

<DockPanel>

<StackPanel DockPanel.Dock="Right" Width="250" Margin="10">

<TextBlock>Camera Position</TextBlock>

<StackPanel Orientation="Horizontal">

<NumericUpDown Background="Red" Value="{Binding CameraViewModel.X}" Increment="0.01" ShowButtonSpinner="False" Width="50" Margin="5"/>

<NumericUpDown Background="Green" Value="{Binding CameraViewModel.Y}" Increment="0.01" ShowButtonSpinner="False" Width="50" Margin="5"/>

<NumericUpDown Background="Blue" Value="{Binding CameraViewModel.Z}" Increment="0.01" ShowButtonSpinner="False" Width="50" Margin="5"/>

</StackPanel>

<Separator/>

<StackPanel Orientation="Horizontal">

<TextBlock Text="Last Render: "/>

<TextBlock Text="{Binding FrameTime}" />

<TextBlock Text=" ms"/>

</StackPanel>

<StackPanel Orientation="Horizontal">

<TextBlock Text="Copy Image to UI: "/>

<TextBlock Text="{Binding IoTime}" />

<TextBlock Text=" ms"/>

</StackPanel>

</StackPanel>

<Image Source="{Binding Image}" x:Name="Image" Margin="0" Stretch="Fill" StretchDirection="Both"/>

</DockPanel>

</Window>

To save rendering time, we want our buffer to stay mapped, so we move the UnmapMemory call into VkBuffer’s Dispose method. Furthermore, we need to send the camera’s information to our shader, for which we use uniform buffers. Like our VkImage class, our VkBuffer class therefore needs to return a descriptor info struct, in this case a DescriptorBufferInfo.

public DescriptorBufferInfo GetBufferInfo() => new()

{

Buffer = Buffer,

Offset = 0,

Range = Size

};

In our main view model, let's add two stopwatches and observable fields to write their measurements to. We use them to get the delta time for our input handler and to profile our renderer. We also need fields for a CameraViewModel and our InputHandler.

//observables

[ObservableProperty] private WriteableBitmap _image;

[ObservableProperty] private CameraViewModel _cameraViewModel;

[ObservableProperty] private float _frameTime;

[ObservableProperty] private float _ioTime;

//camera and input

private Camera ActiveCamera => _cameraViewModel.ActiveCamera;

private readonly InputHandler _input;

//stopwatches

private readonly Stopwatch _frameTimeStopWatch = new();

private readonly Stopwatch _ioStopWatch = new();

I also cleaned up the other fields a bit; for details, have a look at the linked repository 😉

We need a second VkBuffer object which holds our SceneParameters.

//image and buffers

private VkImage? _vkImage;

private VkBuffer? _vkBuffer;

private readonly VkBuffer _sceneParameterBuffer;

//pointers

private void* _mappedData;

private readonly void* _mappedSceneParameterData;

The constructor now accepts an InputHandler as a parameter. In it we wire up the fields as before and create a new CameraViewModel. To use uniform buffers, we need to add a pool size for this descriptor type and a corresponding binding in our DescriptorSetLayout.

Do not forget to dispose of the new buffer object as well.

public MainViewModel(InputHandler input)

{

_input = input;

_cameraViewModel = new CameraViewModel(new Camera(90, 0.1f, 1000f));

//pipeline creation

var poolSizes = new DescriptorPoolSize[]

{

new() {Type = DescriptorType.StorageImage, DescriptorCount = 1000},

new() {Type = DescriptorType.UniformBuffer, DescriptorCount = 1000}

};

_descriptorPool = _context.CreateDescriptorPool(poolSizes);

//our old binding has been renamed

var binding0 = new DescriptorSetLayoutBinding

{

Binding = 0,

DescriptorCount = 1,

DescriptorType = DescriptorType.StorageImage,

StageFlags = ShaderStageFlags.ComputeBit

};

var binding1 = new DescriptorSetLayoutBinding

{

Binding = 1,

DescriptorCount = 1,

DescriptorType = DescriptorType.UniformBuffer,

StageFlags = ShaderStageFlags.ComputeBit

};

//other code

_sceneParameterBuffer = new VkBuffer(_context, (uint) sizeof(SceneParameters), BufferUsageFlags.UniformBufferBit, MemoryPropertyFlags.HostVisibleBit | MemoryPropertyFlags.HostCoherentBit);

_sceneParameterBuffer.MapMemory(ref _mappedSceneParameterData);

_context.UpdateDescriptorSetBuffer(ref _descriptorSet, _sceneParameterBuffer.GetBufferInfo(), DescriptorType.UniformBuffer, 1);

}

We need to implement UpdateDescriptorSetBuffer in our VkContext class, which is straightforward.

public void UpdateDescriptorSetBuffer(ref DescriptorSet set, DescriptorBufferInfo bufferInfo, DescriptorType type,

uint binding)

{

var write = new WriteDescriptorSet

{

SType = StructureType.WriteDescriptorSet,

DstSet = set,

DstBinding = binding,

DstArrayElement = 0,

DescriptorCount = 1,

PBufferInfo = &bufferInfo,

DescriptorType = type

};

_vk.UpdateDescriptorSets(_device, 1, &write, 0, default);

}

In our Resize method we recreate all objects that depend on the image size, disposing the old ones first: the VkImage itself, the WriteableBitmap and the staging buffer. We also call the camera's Resize method.

public void Resize(uint x, uint y)

{

_vkImage?.Dispose();

_vkImage = new VkImage(_context, x, y, Format.B8G8R8A8Unorm, ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);

_vkImage.TransitionLayoutImmediate(ImageLayout.General);

_context.UpdateDescriptorSetImage(ref _descriptorSet, _vkImage.GetImageInfo(), DescriptorType.StorageImage, 0);

//keep a reference to the old bitmap; disposing it before the new one is assigned crashes the app

var tmp = _image;

_image = new WriteableBitmap(new PixelSize((int) x, (int) y), new Vector(96, 96), PixelFormat.Bgra8888);

OnPropertyChanged(nameof(Image));

//dispose old

tmp?.Dispose();

_vkBuffer?.Dispose();

_vkBuffer = new VkBuffer(_context, _vkImage.Width * _vkImage.Height * 4, BufferUsageFlags.TransferDstBit,

MemoryPropertyFlags.HostCachedBit | MemoryPropertyFlags.HostCoherentBit |

MemoryPropertyFlags.HostVisibleBit);

_vkBuffer.MapMemory(ref _mappedData);

ActiveCamera.Resize(x, y);

}

Everything in the constructor that Resize now does as well can safely be removed from the constructor, except for creating the WriteableBitmap.

Let's clean up the Render method and wire up the stopwatches.

public void Render()

{

if(_vkImage is null) return;

_frameTimeStopWatch.Start();

HandleInput(FrameTime / 1000f);

UpdateSceneParameters();

RenderImage();

_ioStopWatch.Start();

CopyImageToHost();

_ioStopWatch.Stop();

IoTime = (float) _ioStopWatch.Elapsed.TotalMilliseconds;

_ioStopWatch.Reset();

_frameTimeStopWatch.Stop();

FrameTime = (float) _frameTimeStopWatch.Elapsed.TotalMilliseconds;

_frameTimeStopWatch.Reset();

}

In UpdateSceneParameters we update the uniform buffer's contents. For this we need a struct containing all the data we want to send.

[StructLayout(LayoutKind.Sequential)]

public struct SceneParameters

{

public Matrix4x4 CameraProjection;

public Matrix4x4 InverseCameraProjection;

public Matrix4x4 CameraView;

public Matrix4x4 InverseCameraView;

}

As our buffer is permanently mapped (also called persistent mapping), we simply need to copy our values into it via System.Buffer.MemoryCopy.
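The copy is only valid because SceneParameters is a blittable struct whose bytes are exactly what the shader's std140 block expects: four 64-byte matrices at offsets 0, 64, 128 and 192. The following minimal sketch checks that layout and performs the same byte-for-byte copy, with a byte array standing in for the mapped pointer. SceneParameterUpload and Upload are hypothetical names, and the struct is repeated only so the snippet compiles on its own. It also shows that the translation of a System.Numerics matrix (M41..M43) lands in the last 16 bytes of that matrix, which GLSL reads as the fourth column. The actual UpdateSceneParameters method follows.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

// Same layout as the article's SceneParameters: four 4x4 float matrices, 64 bytes each.
[StructLayout(LayoutKind.Sequential)]
public struct SceneParameters
{
    public Matrix4x4 CameraProjection;
    public Matrix4x4 InverseCameraProjection;
    public Matrix4x4 CameraView;
    public Matrix4x4 InverseCameraView;
}

// Hypothetical helper, not part of the article's code.
public static class SceneParameterUpload
{
    // Copies the struct byte-for-byte into `mapped`, which stands in for the
    // persistently mapped uniform buffer memory (a plain byte span, no Vulkan needed).
    public static void Upload(SceneParameters parameters, Span<byte> mapped)
    {
        ReadOnlySpan<SceneParameters> one = new[] { parameters };
        MemoryMarshal.AsBytes(one).CopyTo(mapped);
    }
}
```

If the shader block and the C# struct ever diverge, size and offset checks like the ones below are a cheap way to catch it.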

private void UpdateSceneParameters()

{

//update ubo

var parameters = new SceneParameters

{

CameraProjection = ActiveCamera.Projection,

InverseCameraProjection = ActiveCamera.InverseProjection,

CameraView = ActiveCamera.View,

InverseCameraView = ActiveCamera.InverseView

};

System.Buffer.MemoryCopy(&parameters, _mappedSceneParameterData, sizeof(SceneParameters), sizeof(SceneParameters));

}

The HandleInput method checks which keys are pressed, moves the camera accordingly and recalculates the view matrix.
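Stripped of Avalonia's Key enum and the CameraViewModel, the movement math can be checked in isolation. This is a minimal sketch rather than the article's code: MoveKey and ComputeOffset are hypothetical names, while the key mapping and the speed of 5 units per second are taken from HandleInput. Summing the directions first and normalizing afterwards keeps diagonal movement from being faster than straight movement. The full method follows.

```csharp
using System.Collections.Generic;
using System.Numerics;

public static class CameraMovement
{
    // Hypothetical stand-in for Avalonia's Key enum so the sketch runs without a UI.
    public enum MoveKey { W, A, S, D, Q, E }

    // Returns the camera offset for one frame, mirroring HandleInput: sum the
    // directions of all pressed keys, normalize, then scale by speed * deltaTime.
    public static Vector3 ComputeOffset(ISet<MoveKey> pressed, Vector3 forward, float deltaTime, float unitsPerSecond = 5f)
    {
        Vector3 right = Vector3.Cross(forward, Vector3.UnitY);
        Vector3 move = Vector3.Zero;
        if (pressed.Contains(MoveKey.W)) move += forward;
        if (pressed.Contains(MoveKey.S)) move -= forward;
        if (pressed.Contains(MoveKey.D)) move += right;
        if (pressed.Contains(MoveKey.A)) move -= right;
        if (pressed.Contains(MoveKey.Q)) move += Vector3.UnitY;
        if (pressed.Contains(MoveKey.E)) move -= Vector3.UnitY;
        // Opposite keys (e.g. W and S) cancel out; normalizing a zero vector would yield NaN.
        if (move == Vector3.Zero) return Vector3.Zero;
        return Vector3.Normalize(move) * unitsPerSecond * deltaTime;
    }
}
```

The early return on a zero vector plays the same role as the `moved` flag and the `Length() == 0` check in HandleInput.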

private void HandleInput(float deltaTime)

{

//camera move

var speed = 5f * deltaTime;

var right = Vector3.Cross(ActiveCamera.Forward, Vector3.UnitY);

var moved = false;

var moveVector = Vector3.Zero;

if (_input.PressedKeys.Contains(Key.W))

{

moveVector += ActiveCamera.Forward;

moved = true;

}

if (_input.PressedKeys.Contains(Key.S))

{

moveVector -= ActiveCamera.Forward;

moved = true;

}

if (_input.PressedKeys.Contains(Key.D))

{

moveVector += right;

moved = true;

}

if (_input.PressedKeys.Contains(Key.A))

{

moveVector -= right;

moved = true;

}

if (_input.PressedKeys.Contains(Key.Q))

{

moveVector += Vector3.UnitY;

moved = true;

}

if (_input.PressedKeys.Contains(Key.E))

{

moveVector -= Vector3.UnitY;

moved = true;

}

if (!moved) return;

if(moveVector.Length() == 0) return;

moveVector = Vector3.Normalize(moveVector) * speed;

_cameraViewModel.Position += moveVector;

ActiveCamera.RecalculateView();

}

RenderImage and CopyImageToHost contain the code we previously had in Render.

private void RenderImage()

{

//execute compute shader

_context.BeginCommandBuffer(_cmd);

_vkImage!.TransitionLayout(_cmd, ImageLayout.General);

_context.BindComputePipeline(_cmd, _pipeline);

_context.BindComputeDescriptorSet(_cmd, _descriptorSet, _pipelineLayout);

_context.Dispatch(_cmd, _vkImage.Width/32, _vkImage.Height/32, 1);

_vkImage.TransitionLayout(_cmd, ImageLayout.TransferSrcOptimal);

_vkImage.CopyToBuffer(_cmd, _vkBuffer!.Buffer);

_context.EndCommandBuffer(_cmd);

_context.WaitForQueue();

}

private void CopyImageToHost()

{

using var buffer = _image.Lock();

var size = _vkImage!.Width * _vkImage.Height * 4;

System.Buffer.MemoryCopy(_mappedData, (void*) buffer.Address, size, size);

}

Now we are ready to modify our compute shader.
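Before we do, one caveat about RenderImage: `_vkImage.Width/32` is an integer division, so when the window size is not a multiple of 32 the last partial tile of pixels is never dispatched. Assuming the shader's local size is 32x32, as the division suggests (it was set in Part 1 and is not shown here), a round-up helper like the hypothetical GroupCount below covers the whole image. The shader then has to skip invocations outside the image, for example by comparing gl_GlobalInvocationID.xy with imageSize(resultImage) and returning early.

```csharp
using System;

public static class DispatchSize
{
    // Hypothetical helper: number of work groups needed so that groups of
    // `localSize` invocations cover `pixels` pixels. Rounds up, whereas
    // plain integer division (Width / 32) silently drops the remainder.
    public static uint GroupCount(uint pixels, uint localSize)
    {
        if (localSize == 0) throw new ArgumentOutOfRangeException(nameof(localSize));
        return (pixels + localSize - 1) / localSize;
    }
}
```

For an 810-pixel-wide image, Width / 32 yields 25 groups (800 pixels), so the last 10 columns are never written, while GroupCount(810, 32) yields 26. The dispatch would then read `_context.Dispatch(_cmd, DispatchSize.GroupCount(_vkImage.Width, 32), DispatchSize.GroupCount(_vkImage.Height, 32), 1);`.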

Extending our Compute Shader

First, we need to fix an error we made in the first part.

The following line is wrong (look at the parentheses):

float t = (-b - sqrt(discriminant) / (2 * a));

Here only sqrt(discriminant) is divided by 2a instead of the whole numerator. This is the correct version:

float t = (-b - sqrt(discriminant)) / (2.0 * a);
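To see why the parentheses matter, here is a small C# sketch that computes the ray/sphere coefficients the same way hitSphere does and evaluates both expressions. SphereIntersection and its methods are hypothetical names used only for illustration.

```csharp
using System;
using System.Numerics;

public static class SphereIntersection
{
    // Coefficients of a*t^2 + b*t + c = 0 for a ray against a sphere, as in hitSphere.
    public static (float A, float B, float Discriminant) Coefficients(
        Vector3 rayOrigin, Vector3 rayDirection, Vector3 center, float radius)
    {
        Vector3 oc = rayOrigin - center;
        float a = Vector3.Dot(rayDirection, rayDirection);
        float b = 2f * Vector3.Dot(oc, rayDirection);
        float c = Vector3.Dot(oc, oc) - radius * radius;
        return (a, b, b * b - 4f * a * c);
    }

    // Corrected line: the whole numerator is divided by 2a (nearest root).
    public static float NearestT(float a, float b, float discriminant) =>
        (-b - MathF.Sqrt(discriminant)) / (2f * a);

    // Buggy line from Part 1: only sqrt(discriminant) is divided by 2a.
    public static float BuggyT(float a, float b, float discriminant) =>
        (-b - MathF.Sqrt(discriminant) / (2f * a));
}
```

For a ray from the origin with the non-normalized direction (0, 0, -2) towards a sphere of radius 0.5 at (0, 0, -1), the roots are 0.25 and 0.75. The corrected expression returns 0.25, i.e. the hit point (0, 0, -0.5) on the front of the sphere, while the buggy one returns 3.75, which is not a root at all.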

We also need to fix the calculation of the screen-space coordinates in our main function.
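The mapping is easy to check on the CPU: each pixel is sampled at its center (offset 0.5), scaled to [0, 1] and then to [-1, 1], with y flipped because image rows grow downwards while +y should point up. A sketch with a hypothetical PixelToNdc helper that mirrors the shader code:

```csharp
using System.Numerics;

public static class ScreenMapping
{
    // Hypothetical helper mirroring the shader: pixel center -> [0, 1] -> [-1, 1],
    // with y flipped so that the top row of the image maps to +1.
    public static Vector2 PixelToNdc(uint x, uint y, uint width, uint height)
    {
        float u = (x + 0.5f) / width;
        float v = (y + 0.5f) / height;
        return new Vector2(u * 2f - 1f, 1f - v * 2f);
    }
}
```

For a 2x2 image, the top-left pixel maps to (-0.5, 0.5) and the bottom-right pixel to (0.5, -0.5).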

vec2 imageSize = vec2(imageSize(resultImage));

//calculate uv coords

vec2 uv = (gl_GlobalInvocationID.xy + 0.5) / imageSize.xy; //pixel center in [0, 1]

uv.x = uv.x * 2 - 1; //x in [-1, 1]

uv.y = 1 - uv.y * 2; //y in [-1, 1], flipped so that +y points up

To use the data from our uniform buffer, we need to tell the shader where to find it and what content to expect, similar to the image2D.

layout(binding = 1, std140) uniform SceneData{ //must match the C# SceneParameters struct: 4 x mat4 = 256 bytes

mat4 camProj;

mat4 invCamProj;

mat4 camView;

mat4 invCamView;

} sceneData;

We also add a payload struct to keep track of the hit point, normal and hit distance.

struct payload {

vec3 hitPoint;

vec3 normal;

float hitDistance;

};

We populate such a struct in our hitSphere function:

bool hitSphere(sphere sphere, ray ray, inout payload payload)

{

//solve sphere equation

vec3 origin = ray.origin - sphere.center;

float a = dot(ray.direction, ray.direction);

float b = 2 * dot(origin, ray.direction);

float c = dot(origin, origin) - sphere.radius * sphere.radius;

float discriminant = b * b - 4 * a * c;

if (discriminant < 0) return false;

//calculate hitpoint and normal

float t = (-b - sqrt(discriminant)) / (2.0 * a);

if (t <= 0.001) return false;

payload.hitDistance = t;

vec3 hitPoint = ray.origin + t * ray.direction;

payload.hitPoint = hitPoint;

vec3 normal = normalize(hitPoint - sphere.center);

if(dot(normal, ray.direction) >= 0) normal = -1 * normal;

payload.normal = normal;

return true;

}

In main we calculate the ray's direction and origin for the current pixel:

vec3 dir = GetRayDirection(uv);

vec3 origin = sceneData.invCamView[3].xyz;

ray ray = ray(origin, dir);

vec3 color = PerPixel(ray);

color = clamp(color, 0, 1);

The GetRayDirection function is based on TheCherno's camera and uses the inverse matrices to turn screen coordinates into a world-space direction.
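A CPU port helps to see what these matrices do. The sketch below assumes the camera builds its matrices with System.Numerics' Matrix4x4.CreatePerspectiveFieldOfView and Matrix4x4.CreateLookAt; the Camera class is not shown here, so that is an assumption, and RayGeneration is a hypothetical name. Because System.Numerics stores matrices row by row for row vectors while GLSL reads a mat4 column by column, the uploaded matrix arrives transposed. The shader's `M * v` therefore corresponds to `Vector4.Transform(v, M)` in C#, and `invCamView[3].xyz` (the fourth column in GLSL) is the translation row M41..M43, i.e. the camera position. The GLSL function follows.

```csharp
using System.Numerics;

public static class RayGeneration
{
    // CPU port of GetRayDirection: unproject the NDC point onto the far plane,
    // normalize it in view space, then rotate it into world space (w = 0).
    public static Vector3 GetRayDirection(Vector2 ndc, Matrix4x4 invProj, Matrix4x4 invView)
    {
        Vector4 target = Vector4.Transform(new Vector4(ndc, 1f, 1f), invProj);
        Vector3 viewDir = Vector3.Normalize(new Vector3(target.X, target.Y, target.Z) / target.W);
        Vector4 world = Vector4.Transform(new Vector4(viewDir, 0f), invView);
        return Vector3.Normalize(new Vector3(world.X, world.Y, world.Z));
    }

    // Ray origin: the translation of the inverse view matrix, which the shader
    // reads as invCamView[3].xyz.
    public static Vector3 GetRayOrigin(Matrix4x4 invView) =>
        new Vector3(invView.M41, invView.M42, invView.M43);
}
```

With a camera at (1, 2, 3) looking down -Z and a 90° field of view, the screen center yields the direction (0, 0, -1) and the right edge (NDC x = 1) roughly (0.707, 0, -0.707).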

vec3 GetRayDirection(vec2 coord)

{

vec4 target = sceneData.invCamProj * vec4(coord.xy, 1, 1);

vec3 dir = vec3(sceneData.invCamView * vec4(normalize(vec3(target.xyz / target.w)), 0));

return normalize(dir);

}

In our PerPixel function we add a for loop over an array of spheres. We keep track of the closest hit and, if nothing is hit, output the sky gradient from the “Ray Tracing in One Weekend” book. The code should be more or less identical to what you will find in the book.
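The closest-hit search and the sky gradient are plain arithmetic, so they can be sanity-checked on the CPU as well. Below is a hedged C# port using the two spheres from the shader; the Sphere record, PerPixelSketch and TryHit are hypothetical names, and float.PositiveInfinity takes the place of the shader's initial closestT. The GLSL version follows.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU-side stand-in for the shader's sphere struct.
public readonly record struct Sphere(Vector3 Center, float Radius);

public static class PerPixelSketch
{
    // Same test as hitSphere: nearest root, hits closer than 0.001 are rejected,
    // and the normal is flipped to face the incoming ray.
    static bool TryHit(Sphere s, Vector3 origin, Vector3 dir, out float t, out Vector3 normal)
    {
        Vector3 oc = origin - s.Center;
        float a = Vector3.Dot(dir, dir);
        float b = 2f * Vector3.Dot(oc, dir);
        float c = Vector3.Dot(oc, oc) - s.Radius * s.Radius;
        float disc = b * b - 4f * a * c;
        t = 0f;
        normal = Vector3.Zero;
        if (disc < 0f) return false;
        t = (-b - MathF.Sqrt(disc)) / (2f * a);
        if (t <= 0.001f) return false;
        Vector3 hitPoint = origin + t * dir;
        normal = Vector3.Normalize(hitPoint - s.Center);
        if (Vector3.Dot(normal, dir) >= 0f) normal = -normal;
        return true;
    }

    public static Vector3 PerPixel(Sphere[] spheres, Vector3 origin, Vector3 dir)
    {
        float closestT = float.PositiveInfinity;
        Vector3 closestNormal = Vector3.Zero;
        foreach (var s in spheres)
        {
            // Keep only the hit with the smallest distance along the ray.
            if (TryHit(s, origin, dir, out float t, out Vector3 n) && t < closestT)
            {
                closestT = t;
                closestNormal = n;
            }
        }
        if (!float.IsPositiveInfinity(closestT)) return 0.5f * (closestNormal + Vector3.One);
        // Sky gradient: white at the bottom, light blue at the top.
        float blend = 0.5f * (Vector3.Normalize(dir).Y + 1f);
        return (1f - blend) * Vector3.One + blend * new Vector3(0.5f, 0.7f, 1f);
    }
}
```

A ray straight down -Z hits the small sphere at t = 0.5 with normal (0, 0, 1) and is shaded (0.5, 0.5, 1); a ray pointing straight up misses both spheres and gets the top of the gradient, (0.5, 0.7, 1).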

vec3 PerPixel(ray ray)

{

sphere spheres[2];

spheres[0] = sphere(vec3(0,0, -1), 0.5);

spheres[1] = sphere(vec3(0,-100.5, -1), 100);

payload localPayload;

payload payload;

bool hit = false;

float closestT = 3.402823466e+38; //largest finite float; 1/0 is an integer division by zero, which is undefined in GLSL

for(int i = 0; i < spheres.length(); i++)

{

if (hitSphere(spheres[i], ray, localPayload))

{

hit = true;

if (localPayload.hitDistance < closestT)

{

closestT = localPayload.hitDistance;

payload = localPayload;

}

}

}

if (hit) return 0.5 * (payload.normal + vec3(1));

vec3 unitDir = normalize(ray.direction);

float a = 0.5 * (unitDir.y + 1.0);

return (1.0 - a * vec3(1) + a * vec3(0.5, 0.7, 1.0));

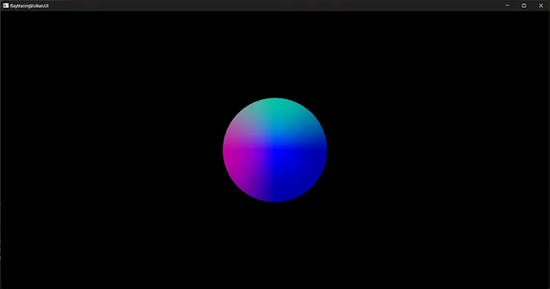

}If we run the program we have an interactable camera which two spheres rendered in front of a blue gradient all contained in an Avalonia application using Vulkan compute shaders. I think that’s enough for today.

You can find the final code for Part 2 in the part-2 branch.

JensKrumsieck/raytracing-vulkan at Part-2 (github.com)

Here is a link to the full changelog: Comparing Part-1…Part-2 · JensKrumsieck/raytracing-vulkan (github.com)

Resources:

This was originally posted on medium: Raytracing in Vulkan using C# — Part 2 | by Jens Krumsieck | Sep, 2023 | Medium

About Jens

Hi! I'm Jens, a Doctor of Natural Sciences! As a doctoral student in inorganic chemistry at the Technische Universität Braunschweig, I explored the fascinating world of porphyrinoids in the research group of Prof. Dr. Martin Bröring. These nature-inspired structures are behind vital molecules such as heme, the red blood pigment, and chlorophyll, the green plant pigment. Besides science, software development is one of my interests. My journey began with an early fascination for software development, sparked at school by the programmable calculator. During my doctorate, I combined my research with developing software to advance scientific insight.

![Jens](https://images.prismic.io/jenskrumsieck/65b8f7df615e73009ec41171_PXL_20240126_130333113_.jpg?auto=format%2Ccompress&rect=407%2C0%2C2259%2C2259&w=2666&h=2666)