Raytracing in Vulkan using C# — Part 3

The raytracing series is back! This part is rather short and lays the groundwork for the next parts of the series. We will implement random numbers, Lambertian shading and an accumulation texture, and do a little refactoring.

Random numbers

To keep our shader files small and free of long spaghetti code, we will use #include in the shaders.

In raytracing.comp we add an #extension and an #include line. Together they tell our shader compiler glslc to paste the contents of random.glsl at this point.

```glsl
#extension GL_GOOGLE_include_directive : require
#include "./include/random.glsl"
```

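Included files must not be compiled on their own. To exclude them, the ShaderDir item in our .csproj file only matches files directly inside the shaders folder. The single * wildcard does not descend into sub-directories like “include”:

```xml
<ShaderDir Include="$(ProjectDir)\assets\shaders\*" />
```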

The random.glsl file looks as follows:

```glsl
uint gState = 78213298u;

// PCG hash: scrambles the current state into a well-mixed 32-bit value
uint pcg_hash() {
    uint state = gState * 747796405u + 2891336453u;
    uint word = ((state >> ((state >> 28u) + 4u)) ^ state) * 277803737u;
    return (word >> 22u) ^ word;
}

// advances the state and returns a float in [0, 1]
float hash1() {
    gState = pcg_hash();
    return float(gState) / float(0xffffffffu);
}

vec3 hash3() {
    return vec3(hash1(), hash1(), hash1());
}

// float in [min, max]
float hash1(float min, float max) {
    return hash1() * (max - min) + min;
}

vec3 hash3(float min, float max) {
    return hash3() * (max - min) + min;
}

// rejection sampling: random point inside the unit sphere
vec3 inUnitSphere() {
    while (true) {
        vec3 p = hash3(-1, 1);
        if (dot(p, p) < 1) return p;
    }
}

// random direction on the unit sphere
vec3 randomUnitVector() {
    return normalize(inUnitSphere());
}
```
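A CPU-side port of random.glsl can be handy for checking the generator outside the shader, for example to inspect the distribution of values. The following is a sketch, not part of the project. The class and method names are made up, and System.Numerics.Vector3 stands in for vec3. C# uint arithmetic wraps modulo 2^32 just like GLSL's, as long as overflow checking stays disabled (the default).

```csharp
using System.Numerics;

// Hypothetical CPU-side port of random.glsl; names are illustrative,
// the constants are the ones used in the shader.
public sealed class PcgRandom
{
    // Plays the role of the global gState in the shader.
    public uint State;

    public PcgRandom(uint seed = 78213298u) => State = seed;

    // pcg_hash(): same arithmetic; unchecked makes the mod-2^32 wraparound explicit.
    private uint PcgHash()
    {
        uint state = unchecked(State * 747796405u + 2891336453u);
        uint word = unchecked(((state >> (int)((state >> 28) + 4u)) ^ state) * 277803737u);
        return (word >> 22) ^ word;
    }

    // hash1(): advance the state and map it to [0, 1] (1.0 is reachable through rounding).
    public float Next()
    {
        State = PcgHash();
        return (float)State / (float)uint.MaxValue;
    }

    // hash1(min, max)
    public float Next(float min, float max) => Next() * (max - min) + min;

    // hash3(min, max)
    public Vector3 Next3(float min, float max) =>
        new(Next(min, max), Next(min, max), Next(min, max));

    // inUnitSphere(): rejection sampling in the cube [-1, 1]^3.
    public Vector3 InUnitSphere()
    {
        while (true)
        {
            Vector3 p = Next3(-1f, 1f);
            if (Vector3.Dot(p, p) < 1f) return p;
        }
    }

    // randomUnitVector()
    public Vector3 RandomUnitVector() => Vector3.Normalize(InUnitSphere());
}
```

The sphere fills π/6 (about 52%) of the cube, so inUnitSphere needs slightly fewer than two iterations on average.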

In our main() function we seed the gState variable per pixel and per frame. We then use the resulting random numbers to jitter the ray directions slightly. Averaging several jittered samples per pixel gives us anti-aliasing.

```glsl
// seed the generator from the pixel position and the frame index
gState *= floatBitsToUint(uv.y * uv.x / 1000) * gl_GlobalInvocationID.x * sceneData.frameIndex;

vec3 origin = sceneData.invCamView[3].xyz;
uint samplesPerPixel = 10;
float influence = 0.0025;   // strength of the direction jitter
vec3 frameColor = vec3(0);

for (int i = 0; i < samplesPerPixel; i++) {
    // slightly perturbed direction per sample for anti-aliasing
    vec3 dir = GetRayDirection(uv) + influence * hash3();
    ray ray = ray(origin, dir);
    frameColor += PerPixel(ray);
}

frameColor /= samplesPerPixel;
```

To support this, we extend the sceneData UBO (declared above main()) with a frameIndex of type uint. The same field has to be added on the C# side as well (not shown in detail here). The frameIndex starts at 1 to avoid multiplying or dividing by 0.

```glsl
layout(binding = 1, std140) uniform SceneData {
    mat4 camProj;
    mat4 invCamProj;
    mat4 camView;
    mat4 invCamView;
    uint frameIndex;
} sceneData;
```
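The post does not show the C# side in detail, so here is a minimal sketch of a matching struct. The field names are taken from the object initializer in Renderer.UpdateSceneParameters further below. The matrix type is an assumption: the post doesn't say what Camera exposes, so System.Numerics.Matrix4x4 is used here.

```csharp
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical C# counterpart of the SceneData uniform block (binding 1).
// Assumption: the camera matrices are System.Numerics.Matrix4x4.
// Sequential layout packs the fields back to back: the four 64-byte matrices sit at
// offsets 0, 64, 128 and 192 and FrameIndex at 256, the same offsets std140 assigns.
[StructLayout(LayoutKind.Sequential)]
public struct SceneParameters
{
    public Matrix4x4 CameraProjection;        // camProj
    public Matrix4x4 InverseCameraProjection; // invCamProj
    public Matrix4x4 CameraView;              // camView
    public Matrix4x4 InverseCameraView;       // invCamView
    public uint FrameIndex;                   // frameIndex, starts at 1
}
```

All fields are unmanaged, so the struct works with sizeof(SceneParameters) and the raw MemoryCopy in UpdateSceneParameters. System.Numerics stores a matrix row by row for row vectors, while GLSL reads the same 16 floats column by column for column vectors. No explicit transpose is therefore needed, and the translation of the inverse view (M41 to M43) lands in invCamView[3].xyz.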

We also add a “constants.glsl” file that holds constant values like infinity and PI, and include it just like “random.glsl”. In GLSL, infinity can be declared by dividing a positive number by 0, e.g. 1.0 / 0.0.

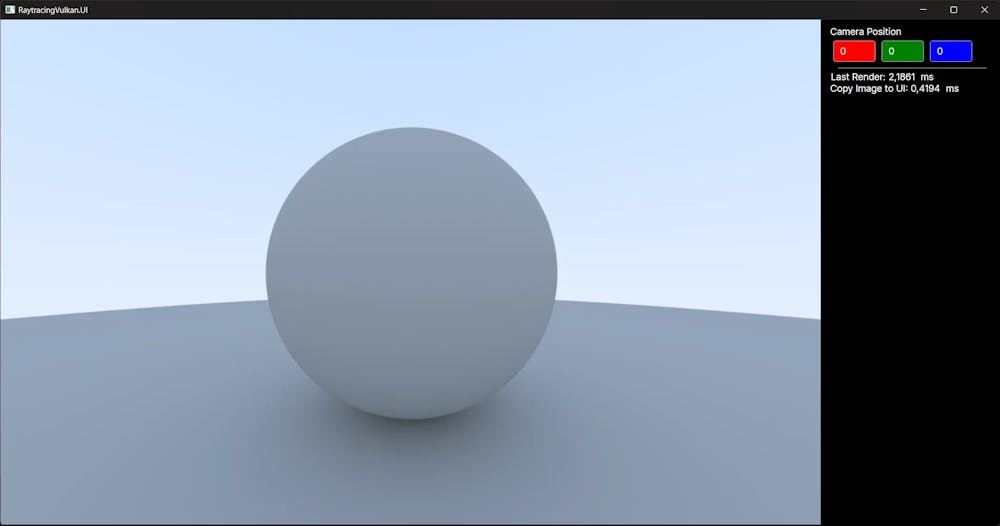

Lambertian shading

To make our spheres look nicer, we add Lambertian (diffuse) shading. For this, each ray has to bounce around the scene, which is usually implemented with recursion. GLSL does not allow recursion, so we use a simple for loop instead. We alter the PerPixel function of our compute shader to look like this:

```glsl
vec3 PerPixel(ray ray)
{
    sphere spheres[2];
    spheres[0] = sphere(vec3(0, 0, -1), -0.5);
    spheres[1] = sphere(vec3(0, -100.5, -1), 100);

    uint maxBounces = 10;
    vec3 finalColor = vec3(0);
    vec3 color = vec3(1);   // throughput, attenuated at every bounce

    for (int d = 0; d < maxBounces; d++) {
        payload localPayload;
        payload payload;
        bool hit = false;
        float closestT = infinity;

        // find the closest hit among all spheres
        for (int i = 0; i < spheres.length(); i++)
        {
            if (hitSphere(spheres[i], ray, localPayload))
            {
                hit = true;
                if (localPayload.hitDistance < closestT)
                {
                    closestT = localPayload.hitDistance;
                    payload = localPayload;
                }
            }
        }

        if (hit) {
            // absorb 50% and scatter into a random direction around the normal
            color *= 0.5;
            ray.origin = payload.hitPoint + payload.normal * 0.001;  // avoid self-intersection
            ray.direction = payload.normal + randomUnitVector();
        }
        else {
            // sky: blend from white (down) to light blue (up)
            vec3 unitDir = normalize(ray.direction);
            float a = 0.5 * (unitDir.y + 1.0);
            vec3 skyColor = (1.0 - a) * vec3(1.0) + a * vec3(0.5, 0.7, 1.0);
            finalColor = color * skyColor;
            break;
        }
    }

    return finalColor;
}
```
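The miss branch and the attenuation can also be checked on the CPU. Below is a small sketch with hypothetical helper names, using System.Numerics.Vector3. The sky is a linear blend from white for rays pointing straight down to light blue for rays pointing straight up, and every diffuse bounce halves the throughput. The bounce direction normal + randomUnitVector() is cosine-weighted around the normal, which is what makes this scattering Lambertian.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU reference for the miss branch of PerPixel.
public static class SkyShading
{
    // (1 - a) * white + a * light blue, with a = 0 straight down and a = 1 straight up.
    public static Vector3 SkyColor(Vector3 direction)
    {
        Vector3 unitDir = Vector3.Normalize(direction);
        float a = 0.5f * (unitDir.Y + 1.0f);
        return (1.0f - a) * Vector3.One + a * new Vector3(0.5f, 0.7f, 1.0f);
    }

    // Each hit multiplies the throughput by 0.5, so a ray that escapes
    // after n bounces contributes 0.5^n of the sky color.
    public static Vector3 EscapedColor(int bounces, Vector3 direction) =>
        MathF.Pow(0.5f, bounces) * SkyColor(direction);
}
```

For example, a ray that escapes straight up after two bounces returns a quarter of the zenith color, about (0.125, 0.175, 0.25).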

Accumulation Texture and Renderer Refactoring

Currently our MainViewModel renders the image. We want to move all rendering code into a separate class. We also want a texture that accumulates color data over multiple frames. Let’s do the latter first.

In our MainViewModel we add a second image to our DescriptorSetLayout on binding 2. It has to be (re)initialized wherever we recreate the display texture (_vkImage). This texture, however, needs a different format. We use a 32-bit float (R32G32B32A32Sfloat) for each color channel because the accumulated sum quickly exceeds what B8G8R8A8Unorm can hold: 8 bits per channel, normalized to the range [0, 1].

```csharp
_accumulationTexture = new VkImage(_context, _viewportWidth, _viewportHeight, Format.R32G32B32A32Sfloat,
    ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);
_accumulationTexture.TransitionLayoutImmediate(ImageLayout.General);
```
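To see why the running sum needs a float format, here is a small CPU model. It is hypothetical, not project code. The sum is stored either as a 32-bit float or as an 8-bit UNORM value, which is clamped to [0, 1] and quantized in steps of 1/255. The UNORM store is a simplified model of what the GPU does, not its exact rounding behavior.

```csharp
using System;

// Hypothetical illustration of accumulating into an 8-bit UNORM channel vs. a float channel.
public static class AccumulationFormat
{
    // Simplified UNORM8 store: clamp to [0, 1], then quantize to multiples of 1/255.
    public static float StoreUnorm8(float value) =>
        MathF.Round(Math.Clamp(value, 0f, 1f) * 255f) / 255f;

    // Add the same per-frame value for frameCount frames, then divide by the frame count.
    public static float Average(float perFrame, int frameCount, bool useUnorm8)
    {
        float sum = 0f;
        for (int frameIndex = 1; frameIndex <= frameCount; frameIndex++)
        {
            sum += perFrame;
            if (useUnorm8) sum = StoreUnorm8(sum); // the stored value is what the next frame loads
        }
        return sum / frameCount;
    }
}
```

Accumulating a constant 0.4 for ten frames, the float path averages to roughly 0.4. The 8-bit path saturates at 1.0 in the third frame and averages to 0.1.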

The binding in our shader looks like this (note the rgba32f format qualifier, which matches R32G32B32A32Sfloat):

```glsl
layout(binding = 2, rgba32f) uniform image2D accumulationTexture;
```

All that is left is to perform four steps per pixel. We load the current value from the accumulation texture, add the frame’s color, and store the sum back into the accumulation texture. Finally, we write the sum divided by the frame index to our display texture:

```glsl
vec3 currentColor = imageLoad(accumulationTexture, ivec2(gl_GlobalInvocationID.xy)).rgb;
currentColor += frameColor;
imageStore(accumulationTexture, ivec2(gl_GlobalInvocationID.xy), vec4(currentColor, 1));

vec3 color = currentColor.rgb / sceneData.frameIndex;
color = gamma_correction(color);
color = clamp(color, 0, 1);
imageStore(resultImage, ivec2(gl_GlobalInvocationID.xy), vec4(color, 1));
```
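The accumulation step boils down to a per-pixel running sum divided by the number of accumulated frames. Below is a hypothetical CPU model of it. An array stands in for the rgba32f texture, and the frame counter starts at 1 like sceneData.frameIndex and is reset the way Renderer.Reset resets _frameIndex.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU model of the per-pixel accumulation in the compute shader.
public sealed class AccumulationBuffer
{
    private readonly Vector3[] _sums;                  // stands in for accumulationTexture
    public uint FrameIndex { get; private set; } = 1;  // starts at 1 like sceneData.frameIndex

    public AccumulationBuffer(int pixelCount) => _sums = new Vector3[pixelCount];

    // imageLoad + add + imageStore, then divide by the frame index for display.
    public Vector3 AddSample(int pixel, Vector3 frameColor)
    {
        _sums[pixel] += frameColor;
        return _sums[pixel] / FrameIndex;
    }

    // Called once per frame after all pixels are written (Render increments _frameIndex).
    public void EndFrame() => FrameIndex++;

    // Clear the history and restart at frame 1.
    public void Reset()
    {
        Array.Clear(_sums, 0, _sums.Length);
        FrameIndex = 1;
    }
}
```

On the GPU, a freshly created Vulkan image has undefined contents. The new accumulation texture created in Reset is therefore not guaranteed to start at zero the way the cleared array above does. Clearing it once after creation, for instance with vkCmdClearColorImage, removes that dependency.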

Note: a color.glsl file containing the gamma correction functions was also added and included:

```glsl
float gamma_correction(float linearComponent) {
    return pow(linearComponent, 1.0 / 2.2);
}

vec3 gamma_correction(vec3 linearColor) {
    return vec3(
        gamma_correction(linearColor.x),
        gamma_correction(linearColor.y),
        gamma_correction(linearColor.z));
}
```
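The same curve on the CPU can serve as a reference when checking shader output. This is a hypothetical helper, not project code. Note that a plain power of 1/2.2 only approximates the sRGB transfer function; it is not the exact piecewise sRGB curve.

```csharp
using System;
using System.Numerics;

// Hypothetical CPU version of color.glsl.
public static class ColorUtils
{
    // Encode a linear value with a simple 1/2.2 power curve, as gamma_correction() does.
    public static float GammaCorrection(float linearComponent) =>
        MathF.Pow(linearComponent, 1.0f / 2.2f);

    // Component-wise overload for colors.
    public static Vector3 GammaCorrection(Vector3 linearColor) =>
        new(GammaCorrection(linearColor.X), GammaCorrection(linearColor.Y), GammaCorrection(linearColor.Z));
}
```

A linear mid-grey of 0.5 maps to roughly 0.73. This is why accumulated images look noticeably brighter after gamma correction.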

Finally, we move all rendering code out of the MainViewModel into a Renderer class:

```csharp
using Silk.NET.Vulkan;

namespace RaytracingVulkan;

public sealed unsafe class Renderer : IDisposable
{
    private readonly VkContext _context;
    private readonly CommandBuffer _cmd;
    private readonly DescriptorPool _descriptorPool;
    private DescriptorSet _descriptorSet;
    private readonly DescriptorSetLayout _setLayout;
    private readonly PipelineLayout _pipelineLayout;
    private readonly Pipeline _pipeline;

    private VkImage? _vkImage;                        // display texture, binding 0
    private VkImage? _accumulationTexture;            // running color sum, binding 2
    private VkBuffer? _vkBuffer;                      // host-visible copy of the display texture
    private readonly VkBuffer _sceneParameterBuffer;  // SceneData UBO, binding 1
    private void* _mappedData;
    private readonly void* _mappedSceneParameterData;

    private uint _viewportWidth;
    private uint _viewportHeight;
    private uint _frameIndex = 1;  // starts at 1 to avoid multiplying or dividing by 0

    public bool IsReady;

    public Renderer(VkContext context)
    {
        _context = context;

        var poolSizes = new DescriptorPoolSize[]
        {
            new() {Type = DescriptorType.StorageImage, DescriptorCount = 1000},
            new() {Type = DescriptorType.UniformBuffer, DescriptorCount = 1000}
        };
        _descriptorPool = _context.CreateDescriptorPool(poolSizes);

        var binding0 = new DescriptorSetLayoutBinding
        {
            Binding = 0,
            DescriptorCount = 1,
            DescriptorType = DescriptorType.StorageImage,
            StageFlags = ShaderStageFlags.ComputeBit
        };
        var binding1 = new DescriptorSetLayoutBinding
        {
            Binding = 1,
            DescriptorCount = 1,
            DescriptorType = DescriptorType.UniformBuffer,
            StageFlags = ShaderStageFlags.ComputeBit
        };
        var binding2 = binding0 with {Binding = 2};  // accumulation texture, also a storage image
        _setLayout = _context.CreateDescriptorSetLayout(new[] {binding0, binding1, binding2});
        _descriptorSet = _context.AllocateDescriptorSet(_descriptorPool, _setLayout);

        var shaderModule = _context.LoadShaderModule("./assets/shaders/raytracing.comp.spv");
        _pipelineLayout = _context.CreatePipelineLayout(_setLayout);
        _pipeline = _context.CreateComputePipeline(_pipelineLayout, shaderModule);

        _sceneParameterBuffer = new VkBuffer(_context, (uint) sizeof(SceneParameters), BufferUsageFlags.UniformBufferBit,
            MemoryPropertyFlags.HostVisibleBit | MemoryPropertyFlags.HostCoherentBit);
        _sceneParameterBuffer.MapMemory(ref _mappedSceneParameterData);
        _context.UpdateDescriptorSetBuffer(ref _descriptorSet, _sceneParameterBuffer.GetBufferInfo(), DescriptorType.UniformBuffer, 1);

        _context.DestroyShaderModule(shaderModule);
        _cmd = _context.AllocateCommandBuffer();
    }

    public void Render(Camera camera)
    {
        UpdateSceneParameters(camera);
        RenderImage();
        _frameIndex++;
    }

    public void Resize(uint x, uint y)
    {
        _viewportWidth = x;
        _viewportHeight = y;
    }

    private void RenderImage()
    {
        _context.BeginCommandBuffer(_cmd);
        _vkImage!.TransitionLayout(_cmd, ImageLayout.General);
        _context.BindComputePipeline(_cmd, _pipeline);
        _context.BindComputeDescriptorSet(_cmd, _descriptorSet, _pipelineLayout);
        _context.Dispatch(_cmd, _vkImage.Width / 32, _vkImage.Height / 32, 1);
        _vkImage.TransitionLayout(_cmd, ImageLayout.TransferSrcOptimal);
        _vkImage.CopyToBuffer(_cmd, _vkBuffer!.Buffer);
        _context.EndCommandBuffer(_cmd);
        _context.WaitForQueue();
    }

    private void UpdateSceneParameters(Camera camera)
    {
        var parameters = new SceneParameters
        {
            CameraProjection = camera.Projection,
            InverseCameraProjection = camera.InverseProjection,
            CameraView = camera.View,
            InverseCameraView = camera.InverseView,
            FrameIndex = _frameIndex
        };
        // copy the struct into the persistently mapped uniform buffer
        System.Buffer.MemoryCopy(&parameters, _mappedSceneParameterData, sizeof(SceneParameters), sizeof(SceneParameters));
    }

    public void CopyDataTo(IntPtr address)
    {
        var size = _viewportWidth * _viewportHeight * 4;  // 4 bytes per B8G8R8A8 pixel
        System.Buffer.MemoryCopy(_mappedData, address.ToPointer(), size, size);
    }

    public void Reset()
    {
        IsReady = false;

        // display texture (binding 0)
        _vkImage?.Dispose();
        _vkImage = new VkImage(_context, _viewportWidth, _viewportHeight, Format.B8G8R8A8Unorm,
            ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);
        _vkImage.TransitionLayoutImmediate(ImageLayout.General);
        _context.UpdateDescriptorSetImage(ref _descriptorSet, _vkImage.GetImageInfo(), DescriptorType.StorageImage, 0);

        // accumulation texture (binding 2)
        _accumulationTexture?.Dispose();
        _accumulationTexture = new VkImage(_context, _viewportWidth, _viewportHeight, Format.R32G32B32A32Sfloat,
            ImageUsageFlags.StorageBit | ImageUsageFlags.TransferDstBit | ImageUsageFlags.TransferSrcBit);
        _accumulationTexture.TransitionLayoutImmediate(ImageLayout.General);
        _context.UpdateDescriptorSetImage(ref _descriptorSet, _accumulationTexture.GetImageInfo(), DescriptorType.StorageImage, 2);

        // restart accumulation
        _frameIndex = 1;

        // readback buffer for the display texture
        _vkBuffer?.Dispose();
        _vkBuffer = new VkBuffer(_context, _vkImage.Width * _vkImage.Height * 4, BufferUsageFlags.TransferDstBit,
            MemoryPropertyFlags.HostCachedBit | MemoryPropertyFlags.HostCoherentBit |
            MemoryPropertyFlags.HostVisibleBit);
        _vkBuffer.MapMemory(ref _mappedData);

        IsReady = true;
    }

    public void Dispose()
    {
        IsReady = false;
        _context.WaitIdle();
        _sceneParameterBuffer.Dispose();
        _vkBuffer?.Dispose();
        _vkImage?.Dispose();
        _accumulationTexture?.Dispose();
        _context.DestroyDescriptorPool(_descriptorPool);
        _context.DestroyDescriptorSetLayout(_setLayout);
        _context.DestroyPipelineLayout(_pipelineLayout);
        _context.DestroyPipeline(_pipeline);
    }
}
```
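RenderImage dispatches _vkImage.Width/32 by _vkImage.Height/32 work groups, presumably matching a 32x32 local size in raytracing.comp. Integer division truncates, so when the viewport size is not a multiple of 32, the last partial tile of pixels is never computed. The usual fix is a ceiling division; the helper below is a hypothetical sketch. It assumes the shader returns early for invocations outside the image, a check that is not part of the code shown here.

```csharp
// Hypothetical helper for computing compute-shader group counts.
public static class DispatchMath
{
    // What Width / 32 does: truncating division, drops the last partial tile.
    public static uint GroupCountTruncated(uint size, uint localSize) => size / localSize;

    // Ceiling division: enough groups to cover every pixel.
    public static uint GroupCount(uint size, uint localSize) => (size + localSize - 1) / localSize;
}
```

For a 1000-pixel-wide viewport, GroupCountTruncated(1000, 32) yields 31 groups (992 columns), while GroupCount(1000, 32) yields 32.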

The code for this part is located here:

JensKrumsieck/raytracing-vulkan at Part-3 (github.com)

The diff from part 2 is available here:

Comparing Part-2…Part-3 · JensKrumsieck/raytracing-vulkan (github.com)

This was originally posted on Medium: Raytracing in Vulkan using C# — Part 3 | by Jens Krumsieck | Sep, 2023 | Medium

